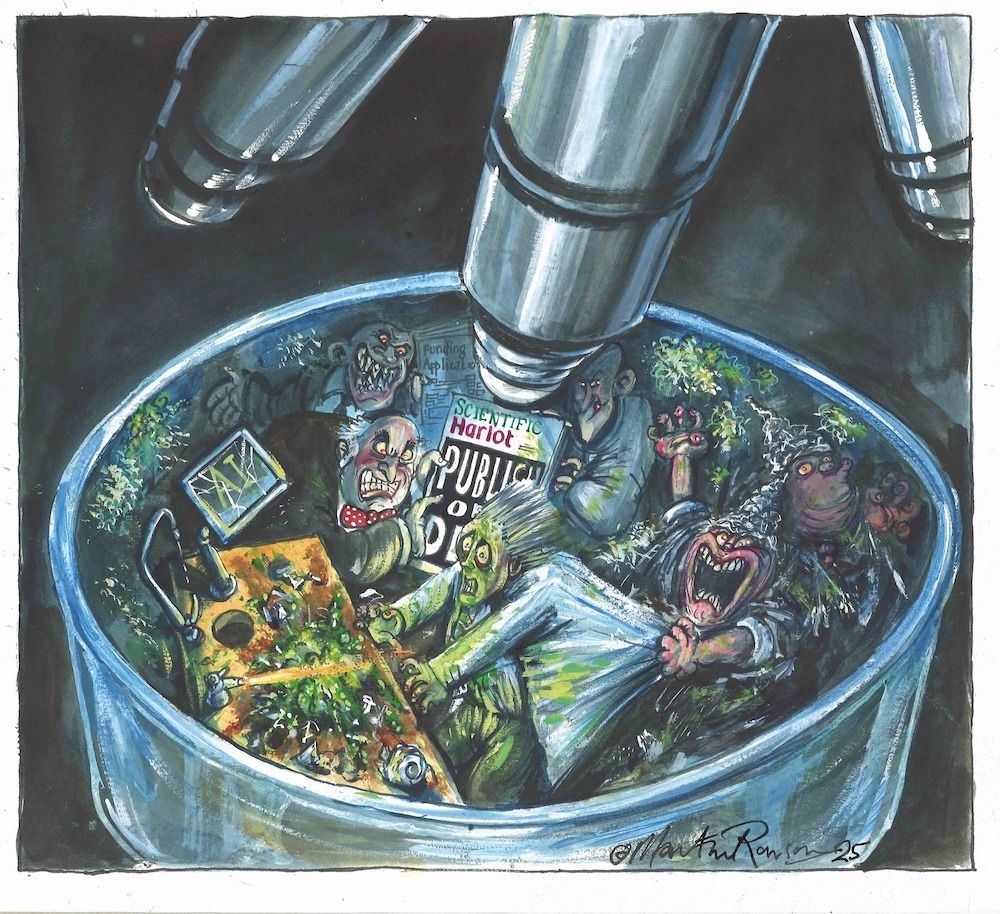

Why are so many scientific studies impossible to replicate? Meet the brave researchers bringing the truth to light

Twenty years ago, John P. A. Ioannidis published an essay with the eye-catching title “Why Most Published Research Findings Are False”. It has since been accessed millions of times and accumulated almost 15,000 citations. Its publication was a singular moment in the developing field of metascience – the application of science to science itself. It’s a field that is attracting a great deal of interest, with the UK opening its first metascience unit last year. So what is metascience, and how can we grapple with the problems it presents?

Ioannidis, a professor at Stanford University, is a leading meta-researcher. For the last two decades, he has studied the methods, practices, reporting standards and incentives at work within the global scientific community. His findings on the integrity of science can be boiled down to some basic principles about the conditions likely to produce false results. First, small studies and small effect sizes are problematic because they lack the statistical power to distinguish a real effect from chance. Second, and more controversially, the greater the flexibility in the study design, the greater the chance of a false result. Then there’s a third major principle: the greater the financial interest or other prejudice, the greater the chance of erroneous results. Even in science, with the purest of intentions, we all bring our biases to the party.
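The essay grounds these principles in a simple formula for the probability that a statistically significant finding is actually true, which Ioannidis calls the positive predictive value (PPV): PPV = (1 − β)R / (R − βR + α), where R is the pre-study odds that a tested relationship is real, α is the false-positive rate and 1 − β is the study’s statistical power. The Python sketch below implements only this basic version. It leaves out the extra bias term the essay uses to model flexibility and prejudice, and the prior odds of 1 to 4 in the usage lines are an illustrative assumption, not a figure from the essay.

```python
def ppv(prior_odds: float, power: float, alpha: float = 0.05) -> float:
    """Probability that a statistically significant finding is true.

    Basic form of the PPV formula, (1 - beta) * R / (R - beta * R + alpha),
    rewritten as true positives / (true positives + false positives).
    prior_odds -- R, odds that a tested relationship is real
    power      -- 1 - beta, chance of detecting a real relationship
    alpha      -- chance that a null relationship comes out significant
    """
    true_positives = power * prior_odds
    false_positives = alpha
    return true_positives / (true_positives + false_positives)


# Illustrative assumption: one real relationship for every four null ones.
for power in (0.8, 0.5, 0.2):
    print(f"power={power:.1f}  PPV={ppv(0.25, power):.2f}")
# power=0.8  PPV=0.80
# power=0.5  PPV=0.71
# power=0.2  PPV=0.50
```

Holding everything else fixed, cutting power from 0.8 to 0.2 turns a significant result from something that is right four times in five into a coin toss, which is the arithmetic behind the first principle.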

These three principles alone add up to a heady brew. In his paper, Ioannidis argued that, overall, a great deal of research should be handled with care. He wasn’t interested in attacking individual papers, but in recognising the room for error and working with the “totality of the evidence”. Metascience didn’t start with Ioannidis, but his essay threw some grit into the science gears. By the time the 2010s rolled around, criticism of the integrity of scientific research was gathering pace, and talk of a “replication” or “reproducibility” crisis was bubbling to the surface.

When a researcher reanalyses the same data using the same methods, they should get the same results; this is reproducibility. If someone else repeats the experiment as described by the original researchers, collecting fresh data, they should obtain consistent results; this is replication. But in the 2010s there was a growing realisation that a worrying number of published studies simply can’t be replicated. Some studies put the replication rate for psychological science, a field hard hit by the crisis, as low as 39 per cent.

These were difficult years, as the research community came to terms with Ioannidis being proven right. Given that science depends on reliable studies, the community was thrust into an existential crisis.

Questionable practices, nonsense results

The various ways in which the practice of science can be distorted cover a wide spectrum. Often they relate to the importance of the “probability value”, or p-value. Any researcher who crunches numbers will appreciate the tyranny of p-values. A p-value is the probability of getting a result at least as extreme as the one observed if there were no real effect. A low p-value means the result would be surprising if chance alone were at work, and researchers call such a result “statistically significant”. In general, a p-value of 0.05 or smaller is treated as the threshold for significance. But this threshold is completely arbitrary – at some point, science just kind of settled on this figure. It has created a hard line that has given the p-value a dark alter-ego and led to distortions that undermine scientific integrity. One example is data dredging, where repeated statistical analyses are performed until a result with a statistically significant p-value is obtained. This and other techniques fall under the heading of “p-hacking” – a term coined in 2014 by three American researchers, Joseph P. Simmons, Leif D. Nelson and Uri Simonsohn.
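Data dredging is easy to demonstrate with a simulation. The Python sketch below, a hypothetical illustration rather than any published analysis, generates studies in which there is no real effect on any of five outcome measures. It then compares an honest analysis of one pre-specified outcome with a dredged analysis that reports whichever outcome happens to reach p < 0.05. To stay dependency-free, it assumes a known standard deviation of 1 and uses a z-test instead of the t-test a real study would usually run; the function names, sample sizes and seed are arbitrary choices.

```python
import random
from statistics import NormalDist, fmean

STD_NORMAL = NormalDist()


def z_test_p(a: list[float], b: list[float]) -> float:
    """Two-sided p-value for a difference in means, assuming both groups
    have a known standard deviation of 1 (a simplification for the demo)."""
    se = (1 / len(a) + 1 / len(b)) ** 0.5
    z = (fmean(a) - fmean(b)) / se
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def false_positive_rates(n_sims=2000, n_per_group=30, n_outcomes=5, seed=1):
    """Simulate studies where NO real effect exists on any outcome.

    Returns (honest_rate, dredged_rate): how often the single planned
    outcome reaches p < 0.05, versus how often at least one of several
    outcomes does when the researcher reports whichever one 'works'."""
    rng = random.Random(seed)
    honest_hits = dredged_hits = 0
    for _ in range(n_sims):
        p_values = []
        for _ in range(n_outcomes):
            treatment = [rng.gauss(0, 1) for _ in range(n_per_group)]
            control = [rng.gauss(0, 1) for _ in range(n_per_group)]
            p_values.append(z_test_p(treatment, control))
        honest_hits += p_values[0] < 0.05   # only the pre-specified outcome
        dredged_hits += min(p_values) < 0.05  # any outcome at all
    return honest_hits / n_sims, dredged_hits / n_sims


honest, dredged = false_positive_rates()
print(f"planned outcome: {honest:.3f}, best of five: {dredged:.3f}")
print(f"theory for five independent tests: {1 - 0.95 ** 5:.3f}")  # 0.226
```

The honest rate should hover near the nominal 5 per cent, while the dredged rate should come out at roughly 1 − 0.95⁵, or about 23 per cent, because each extra independent test is another chance for noise to cross the line.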

Some evidence suggests that p-hacking is, frankly, rife. The various problematic techniques have been captured in the notion of “questionable research practices”. One Danish study published last year in the open access journal PLOS One surveyed researchers across all major disciplines in Denmark, the US and several European countries. It found that self-reported use of some questionable research practices exceeded 40 per cent. Almost half of researchers admitted to indulging in HARKing (hypothesising after the results are known). In good practice, a scientific study should test the hypothesis it set out with, and that hypothesis should not change once the data are in. But when researchers find that the data do not sufficiently support their hypothesis, they might tweak it in retrospect, in order to produce those all-important “significant results”.

These distortions may seem mild, and they have to some extent been normalised – inducing nothing more than a shrug from even seasoned academics. Yet their cumulative effect leaves research in a parlous state. In a brutal critique entitled “False-Positive Psychology”, published in 2011, Simmons, Nelson and Simonsohn deployed just a few questionable research practices to produce a nonsensical result: their study appeared to show that participants became younger simply by listening to the Beatles song “When I’m Sixty-Four”. They sought to demonstrate how easily absurd and potentially dangerous results could be obtained. As they later reflected: “Everyone knew it [the use of questionable research practices] was wrong, but they thought it was wrong the way it’s wrong to jaywalk. We decided to write ‘False-Positive Psychology’ when simulations revealed it was wrong the way it’s wrong to rob a bank.”
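One of the practices Simmons, Nelson and Simonsohn simulated was peeking at the data: testing after an initial batch of participants and, if the result is not yet significant, recruiting more and testing again. The sketch below is loosely modelled on that scenario, with 20 participants per group and 10 more added when needed, but it is a hypothetical reconstruction, not their code. It uses a z-test with an assumed known standard deviation of 1, and every simulated study has no true effect.

```python
import random
from statistics import NormalDist, fmean

STD_NORMAL = NormalDist()


def p_value(a: list[float], b: list[float]) -> float:
    """Two-sided z-test for a mean difference, assuming a known SD of 1."""
    se = (1 / len(a) + 1 / len(b)) ** 0.5
    z = (fmean(a) - fmean(b)) / se
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def optional_stopping_rate(n_sims=10000, start_n=20, extra_n=10, seed=2):
    """Fraction of null studies that end up 'significant' when the researcher
    tests at start_n per group and, if p >= 0.05, adds extra_n more per group
    and tests again. No simulated study contains a true effect."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        a = [rng.gauss(0, 1) for _ in range(start_n)]
        b = [rng.gauss(0, 1) for _ in range(start_n)]
        if p_value(a, b) < 0.05:
            hits += 1
            continue
        # Not significant yet: collect more data and peek again.
        a += [rng.gauss(0, 1) for _ in range(extra_n)]
        b += [rng.gauss(0, 1) for _ in range(extra_n)]
        if p_value(a, b) < 0.05:
            hits += 1
    return hits / n_sims


print(f"false-positive rate with one extra peek: {optional_stopping_rate():.3f}")
```

Because each study gets two chances to cross the 0.05 line, the returned rate lands above 0.05; a normal-theory calculation for this design puts it a little under 0.08. Each such practice looks harmless on its own, and the paper’s point was that combining several of them pushes the false-positive rate far higher.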

Flaws, fraud and fakery

So why aren’t these questionable practices being taken more seriously? This may be partly because the research community has plenty of other problems to worry about, especially as higher education institutions are squeezed. Concerns around academic journals are not new and the charge sheet is long. The peer review process is flawed. There is publication bias: novel, positive findings are far more likely to be published than papers that find “no effect”, which distorts the evidence base. The funding model is a car crash and has been captured by corporate agendas, while conflicts of interest abound. Researchers sometimes feel as though they are trapped in an abusive relationship, as they are overly reliant on big, profit-making publishers. One of these, Elsevier, made a profit of £1.17 billion in 2024, with an astonishing operating margin of 38.4 per cent. Numbers like these are particularly galling as researchers scrabble for grant funding and provide free peer review and editorial labour, only to find that key academic papers are marooned behind paywalls.

There has been some positive change of late with the expansion of open-access publication, where research papers are no longer paywalled and can be downloaded by anyone. Many research funders – such as the National Institute for Health and Care Research, UK Research and Innovation, Wellcome, and research charities such as Cancer Research UK – now mandate that the research they fund is openly available to all. Yet this hasn’t loosened the grip of the big publishing houses, or lessened their profits.

Meanwhile, new problems have emerged. One of these is the rise of paper mills. The Committee on Publication Ethics describes them as “profit oriented, unofficial and potentially illegal organisations that produce and sell fraudulent manuscripts that seem to resemble genuine research”. In the uber-competitive world of academia, it is unsurprising that some people will pay to get their names into journals by illicit means. In some countries, doctoral students cannot graduate unless they publish a paper, and hospital clinicians are also required to publish to get promoted. Up until 2020, Chinese academic institutions were offering cash rewards for publication. The paper mills write the paper – using manipulated or fake data – and even handle the submission to the journal. They’ve been operating for years, but the more recent advent of AI is making fraudulent papers easier to produce and harder for editors to detect. The word “pollution” now comes up regularly in discussions about the overall state of the research literature.

Meta-researchers to the rescue?

Professor Ioannidis is one of the best-known meta-researchers, but many more have since joined the ranks. They are having some success in raising awareness and pushing back against the problems, although their influence is limited as they often act outside the usual academic and regulatory structures.

Dorothy Bishop is one such science sleuth. A singular academic, Bishop was professor of developmental neuropsychology at Oxford until she retired in 2022, and continues to write a blog that deals mostly with distortions in scientific research and publishing. Her first post, in 2010, considered academic misconduct in relation to a paper that was, improbably, about fellatio in fruit bats. Writing in Nature in 2019, towards the end of a four-decade career, she lamented the failure to address some of the weaknesses in the execution of the scientific method, pithily framing the problem as the “four horsemen of the irreproducibility apocalypse”: publication bias, low statistical power, p-value hacking and HARKing.

Bishop made headlines last year when she resigned from the Royal Society in response to the conduct of Elon Musk, a fellow since 2018, calling him “someone who appears to be modeling himself on a Bond villain, a man who has immeasurable wealth and power which he will use to threaten scientists who disagree with him…” Her name has become associated with scientific integrity.

Many of the other science sleuths are younger researchers who are highly motivated, or as Bishop put it, “they’re pretty obsessive and they care passionately about it.” Their activities are often reported on websites such as Retraction Watch and Data Colada, but the work carries real risks. Data Colada, run by none other than Simmons, Nelson and Simonsohn, was sued by the researcher Francesca Gino after it published allegations of data manipulation in studies she had co-authored, which were also the subject of a misconduct investigation at Harvard. Individuals, particularly early-career researchers who already face academic precarity, have to contend with formidable, potentially career-ending consequences when they choose to expose research that falls short.

As Bishop said, “we are going to need to have something more formalised.” There are some early signs of progress. Retraction Watch has appointed “sleuths in residence” and recently announced a new project, the Medical Evidence Project, backed by its parent non-profit, the Center for Scientific Integrity, with a $900,000 grant from Open Philanthropy. The project plans to use forensic metascience to identify problems in articles that could affect human health.

The new UK Metascience Unit, run by UK Research and Innovation and the Department for Science, Innovation and Technology, is the first metascience unit in the world to be embedded in government. It has been given an initial budget of £10 million, in recognition that billions of pounds of public money are invested in scientific research and that this spending deserves rigorous study.

The sleuths are often interested in identifying and exposing research fraud at the more egregious end of the spectrum, but there are many more mundane changes that could improve scientific integrity. Much of the proposed structural change hinges on a move towards “open science”, which has become a global movement led by organisations such as the Center for Open Science in the United States. This goes beyond the simple expedient of publishing open-access papers, important as that is; it reflects a much deeper cultural shift towards being fully open and transparent throughout the research process. For instance, datasets should, where possible, be made available to all, so that claims can be checked and the data can support further research.

The open science movement

Many proponents advocate for “open scholarship” and the need to embed these practices in early career training. As part of this approach, there are calls for much greater use of pre-registration, where proposed research is set out in detail before it is conducted. This addresses some of the problems of post-hoc tweaking and twiddling to get positive results. Simine Vazire, a professor of psychology at the University of Melbourne who specialises in meta-research, has described the open science movement as a “credibility revolution”.

These calls for change have been framed as part of a broader “slow science” movement, which aims to subvert the “publish or perish” culture in academia. Many would argue this is long overdue – it is difficult to overstate how hyper-competitive academia is in some places, with inevitable unintended consequences. However, despite the efforts of sleuths and activists, not everyone is pushing for change, as Bishop pointed out: “There’s rather few really senior people that have embraced all the open science reproducibility sort of stuff.”

There are also anxieties that being seen to criticise science plays into the hands of bad actors. In May, President Donald Trump issued an executive order, “Restoring Gold Standard Science”. It set out a series of largely uncontroversial principles about the conduct of science, familiar to meta-researchers. However, it was criticised by many, including the Center for Open Science, for attempting to politicise science. The Center pointed out that “improving openness, integrity, and reproducibility of research is an iterative, never-ending process for the scientific community” and argued that the order opened a path for evidence to be suppressed according to political ideology. Carl Bergstrom, a metascientist and professor of biology at the University of Washington, posted on Bluesky: “I’ve worried for years that by promoting a science-in-crisis narrative, the meta-science/science reform movement runs the risk of playing useful idiot to those who would like to reduce the influence of science in policy and regulation.”

Then there is the highly polarising figure of Robert F. Kennedy Jr., the secretary of health and human services, known for his promotion of vaccine misinformation and conspiracy theories. Not long after Trump’s executive order, Kennedy suggested that American government scientists should refrain from publishing in three of the world’s leading medical journals, The Lancet, the New England Journal of Medicine and JAMA, branding them “corrupt”.

Say it quietly, but some meta-researchers might be inclined to agree on some level, though for very different reasons and from a very different ideological position. Those meta-researchers are more likely to side with Ioannidis, who, when asked about the potential denigration of science for political ends, said: “Political noise is sad, and I feel strongly about protecting the independence of science from political interference, no matter where that comes from.”

One of the difficulties with the 2005 Ioannidis paper is that the distance from it to the cynical view that “all science is rubbish” seems, at first blush, to be small. Yet there is a gulf between them. Ioannidis pointed out that certain design features increase the risk of research being incorrect, but at no point has he suggested we abandon the scientific method. There is a need for corrective action, and it will have to be taken in the glare of some hostile politics. But as Bishop put it: “It’s not that science is in crisis so much as science is being not properly done, and that is creating a crisis.”